Author

Abstract

This is a short tutorial on backpropagation and its implementation in Python, C++, and CUDA. The full code for this tutorial can be found here.

Feed Forward

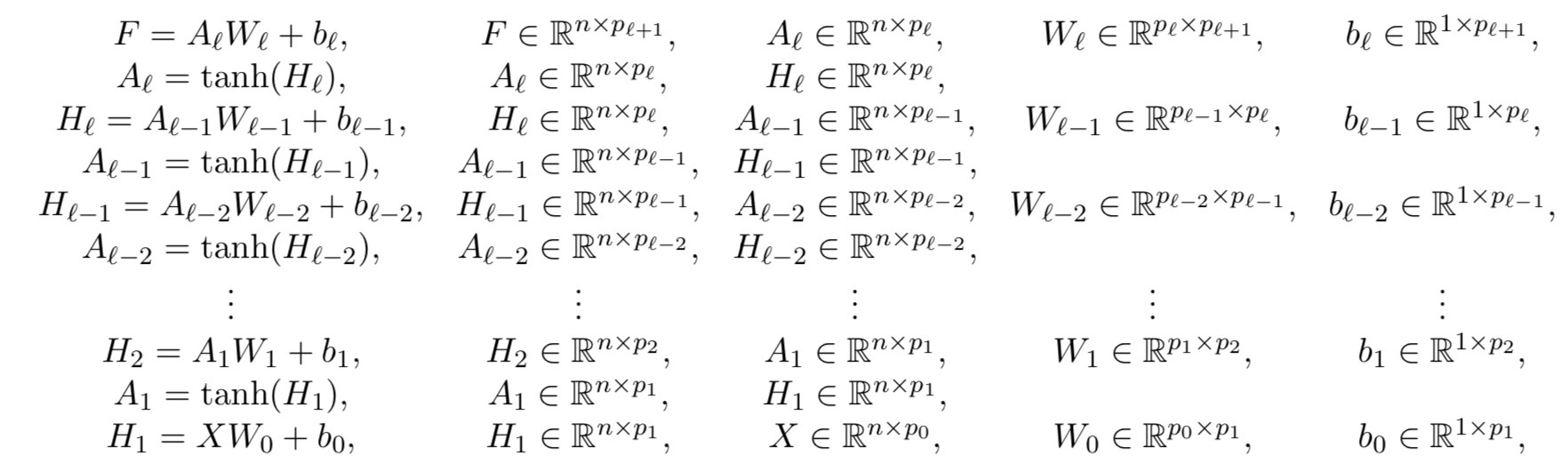

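Let us consider the following densely connected deep neural network

$$
A_0 = X, \qquad H_\ell = A_{\ell-1} W_\ell + b_\ell, \qquad A_\ell = \tanh(H_\ell), \quad \ell = 1, \ldots, L-1, \qquad Y = A_{L-1} W_L + b_L,
$$

taking $X \in \mathbb{R}^{N \times P}$ as input and outputting $Y \in \mathbb{R}^{N \times Q}$. Here, $N$ denotes the number of data points, while the weights $W_\ell$ and biases $b_\ell$ represent the parameters of the neural network. Moreover, let us focus on the sum of squared errors loss function

$$
\mathcal{L} = \frac{1}{2} \sum_{i=1}^{N} \sum_{j=1}^{Q} \left(Y_{ij} - Y^*_{ij}\right)^2,
$$

where $Y^* \in \mathbb{R}^{N \times Q}$ corresponds to the output data.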
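The feed-forward pass and the loss can be sketched in NumPy as follows. This is a minimal illustration rather than the tutorial's reference implementation: it assumes tanh hidden layers, a linear output layer, and a factor of 1/2 in the loss, and the names `feed_forward` and `sse_loss`, the layer sizes, and the random initialization are hypothetical choices made for the example.

```python
import numpy as np

def feed_forward(X, weights, biases):
    """Return the activations [A_0, ..., A_{L-1}] and the network output Y."""
    activations = [X]                          # A_0 = X
    A = X
    for W, b in zip(weights[:-1], biases[:-1]):
        H = A @ W + b                          # H_l = A_{l-1} W_l + b_l
        A = np.tanh(H)                         # A_l = tanh(H_l) (assumed activation)
        activations.append(A)
    Y = A @ weights[-1] + biases[-1]           # linear output layer
    return activations, Y

def sse_loss(Y, Y_star):
    """Sum of squared errors with a conventional factor of 1/2."""
    return 0.5 * np.sum((Y - Y_star) ** 2)

# Hypothetical problem sizes: 3 inputs, two hidden layers, 2 outputs.
rng = np.random.default_rng(0)
layers = [3, 5, 4, 2]
weights = [rng.standard_normal((m, n)) / np.sqrt(m)
           for m, n in zip(layers[:-1], layers[1:])]
biases = [np.zeros((1, n)) for n in layers[1:]]

N = 10                                         # number of data points
X = rng.standard_normal((N, layers[0]))
Y_star = rng.standard_normal((N, layers[-1]))

activations, Y = feed_forward(X, weights, biases)
loss = sse_loss(Y, Y_star)
print(Y.shape, loss)
```

The activations are stored during the forward pass because the backward pass reuses them, which avoids recomputing each layer.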

Back Propagation

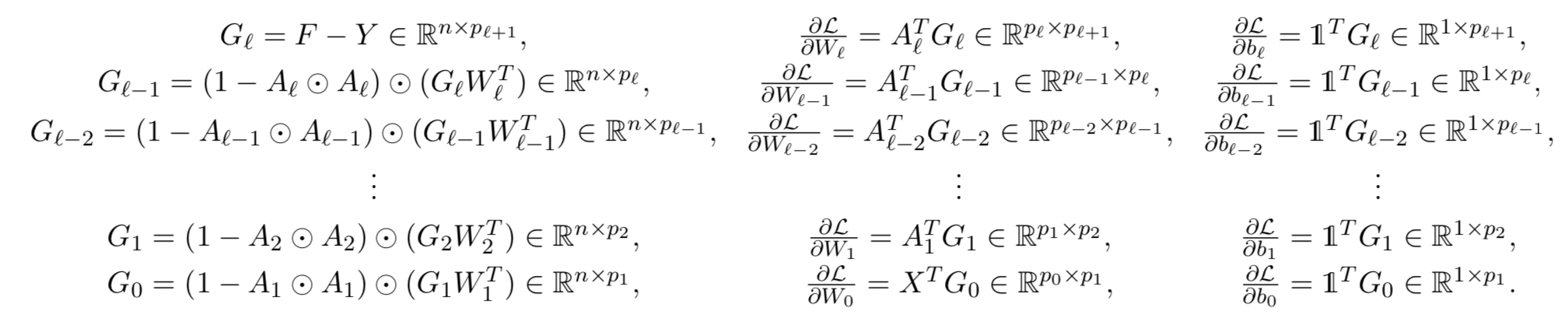

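The gradient of the loss $\mathcal{L}$ with respect to the network output $Y$ is then given by

$$
G_L := \frac{\partial \mathcal{L}}{\partial Y} = Y - Y^*.
$$

Using the chain rule, the gradient of $\mathcal{L}$ with respect to the last-layer weights $W_L$ is given by

$$
\frac{\partial \mathcal{L}}{\partial W_L} = A_{L-1}^\top G_L,
$$

and the gradient of $\mathcal{L}$ with respect to the last-layer biases $b_L$ is given by

$$
\frac{\partial \mathcal{L}}{\partial b_L} = \mathbf{1}^\top G_L,
$$

where $\mathbf{1}$ is an $N \times 1$ matrix filled with ones. The gradient of $\mathcal{L}$ with respect to the last hidden activations $A_{L-1}$ is given by

$$
\frac{\partial \mathcal{L}}{\partial A_{L-1}} = G_L W_L^\top.
$$

Consequently, the gradient of $\mathcal{L}$ with respect to the pre-activations $H_{L-1}$, denoted by $G_{L-1}$, is given by

$$
G_{L-1} := \frac{\partial \mathcal{L}}{\partial H_{L-1}} = \left(1 - A_{L-1} \odot A_{L-1}\right) \odot \left(G_L W_L^\top\right).
$$

Here, we are using the fact that the derivative of $\tanh(x)$ with respect to $x$ is given by $1 - \tanh^2(x)$. Moreover, $\odot$ denotes the point-wise product between two matrices. The above procedure can be repeated to give us the backpropagation algorithm

$$
\frac{\partial \mathcal{L}}{\partial W_\ell} = A_{\ell-1}^\top G_\ell, \qquad \frac{\partial \mathcal{L}}{\partial b_\ell} = \mathbf{1}^\top G_\ell, \qquad \ell = L, \ldots, 1,
$$

$$
G_{\ell-1} = \left(1 - A_{\ell-1} \odot A_{\ell-1}\right) \odot \left(G_\ell W_\ell^\top\right), \qquad \ell = L, \ldots, 2.
$$

Moreover, the gradient of $\mathcal{L}$ with respect to the input $X$ is given by

$$
\frac{\partial \mathcal{L}}{\partial X} = G_1 W_1^\top.
$$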
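The backward pass can be sketched in NumPy and checked against central finite differences. This is an illustrative sketch under stated assumptions (tanh hidden layers, a linear output layer, and a sum of squared errors loss with a factor of 1/2), not the tutorial's published code; the names `back_propagation` and `loss_fn` and the layer sizes are hypothetical.

```python
import numpy as np

def feed_forward(X, weights, biases):
    """Return the activations [A_0, ..., A_{L-1}] and the network output Y."""
    activations = [X]
    A = X
    for W, b in zip(weights[:-1], biases[:-1]):
        A = np.tanh(A @ W + b)                 # assumed tanh hidden layers
        activations.append(A)
    Y = A @ weights[-1] + biases[-1]           # linear output layer
    return activations, Y

def back_propagation(Y, Y_star, activations, weights):
    """Return (list of dL/dW, list of dL/db, dL/dX) for the SSE loss."""
    n_layers = len(weights)
    grad_W = [None] * n_layers
    grad_b = [None] * n_layers
    G = Y - Y_star                             # gradient of the loss w.r.t. Y
    for l in reversed(range(n_layers)):        # 0-based index of each layer
        A_prev = activations[l]                # input to this layer
        grad_W[l] = A_prev.T @ G               # A^T G
        grad_b[l] = np.sum(G, axis=0, keepdims=True)   # 1^T G
        G = G @ weights[l].T                   # gradient w.r.t. the layer input
        if l > 0:
            G = (1.0 - A_prev * A_prev) * G    # tanh'(x) = 1 - tanh(x)^2
    return grad_W, grad_b, G                   # the final G is dL/dX

def loss_fn(X, weights, biases, Y_star):
    _, Y = feed_forward(X, weights, biases)
    return 0.5 * np.sum((Y - Y_star) ** 2)

# Hypothetical problem sizes: 3 inputs, two hidden layers, 2 outputs.
rng = np.random.default_rng(0)
layers = [3, 5, 4, 2]
weights = [rng.standard_normal((m, n)) / np.sqrt(m)
           for m, n in zip(layers[:-1], layers[1:])]
biases = [0.1 * rng.standard_normal((1, n)) for n in layers[1:]]
X = rng.standard_normal((10, layers[0]))
Y_star = rng.standard_normal((10, layers[-1]))

activations, Y = feed_forward(X, weights, biases)
grad_W, grad_b, grad_X = back_propagation(Y, Y_star, activations, weights)

# Central finite difference on one entry of the first weight matrix.
eps = 1e-6
W_plus = [W.copy() for W in weights]
W_minus = [W.copy() for W in weights]
W_plus[0][0, 0] += eps
W_minus[0][0, 0] -= eps
fd_W = (loss_fn(X, W_plus, biases, Y_star)
        - loss_fn(X, W_minus, biases, Y_star)) / (2 * eps)

# Central finite difference on one entry of the input.
X_plus, X_minus = X.copy(), X.copy()
X_plus[0, 0] += eps
X_minus[0, 0] -= eps
fd_X = (loss_fn(X_plus, weights, biases, Y_star)
        - loss_fn(X_minus, weights, biases, Y_star)) / (2 * eps)

print(abs(fd_W - grad_W[0][0, 0]), abs(fd_X - grad_X[0, 0]))
```

Both printed differences should be close to zero if the analytic gradients are correct. Checking a single entry keeps the example short; a fuller check would loop over every parameter.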

All data and code are publicly available on GitHub.